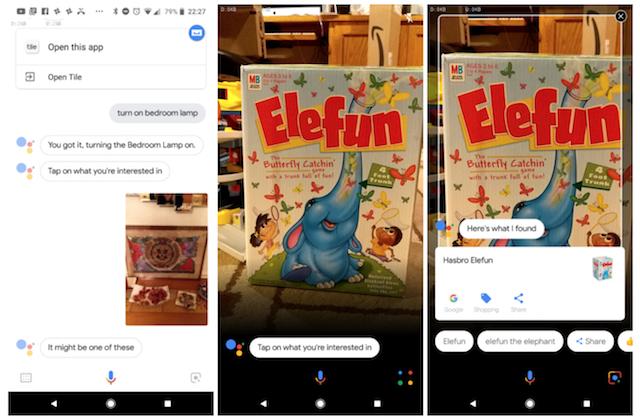

Google announced Google Lens at its annual I/O developer conference back in May this year. It's a new take on Google Goggles, a feature that has essentially been dead since 2014. Google Lens can surface contextual information about whatever the handset's camera is pointed at. The company is now rolling out Lens support to its artificial intelligence-powered Google Assistant.

In effect, Google Lens acts as a camera for Google Assistant, bringing up relevant contextual information on demand. It can also identify objects and landmarks in photos.

Because it's linked to Google's Knowledge Graph, the feature can bring up information about local businesses. Lens can also pull contact details from business cards, surface event information, translate text from other languages, and more.

None of these capabilities is groundbreaking on its own, since all of them are already available through various other apps and services. What Google Lens does is bring them together in a single, simple package that lives inside Google Assistant.

Users are now seeing a new Lens button in Google Assistant that opens the camera when pressed. They then tap the object they want to search, and Assistant relies on Lens to surface the relevant contextual information. It works much like Lens does in Google Photos.

Google has previously confirmed that the feature will initially be exclusive to both generations of Pixel handsets.
